Current Users

Welcome to the NIU HPC facilities, which are powerful tools for study and research. Before you start, please read the essential information on this page carefully.

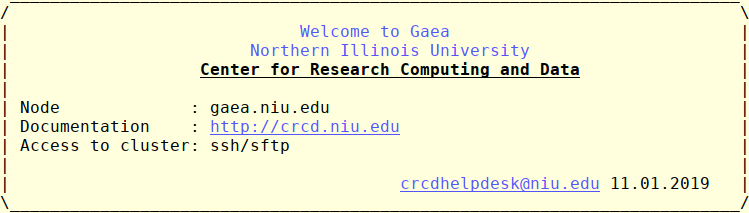

NIU's new compute cluster, Metis (named after the Greek goddess of good counsel, planning, cunning and wisdom), was commissioned in September 2023. New accounts are therefore expected to use Metis. Users of our older system, Gaea, are welcome to copy their projects to Metis but can continue to use Gaea to complete their current tasks.

The two systems have very similar architectures: current users can log in to Metis with their Gaea usernames and passwords, and the Gaea documentation pages also apply to Metis. However, there are several essential differences, listed below:

- the login node for Metis is metis.niu.edu

- we recompiled Gaea's RHEL7 software modules under the RHEL8 OS installed on Metis: log in to metis.niu.edu and run "module avail" to list the supported packages

- the project folders on Metis should be placed on the /lstr/sahara file system

- Gaea's /home, /data1 and /data2 filesystems are not mounted on Metis; to transfer files from gaea.niu.edu, log in to Metis from Gaea and copy your home directory as in the example below:

```shell
# on gaea.niu.edu: log in to Metis
ssh -Y username@metis.niu.edu
# on metis.niu.edu: copy your Gaea home directory
rsync -av username@gaea.niu.edu:/home/username/ /home/username/
```

For every project folder on Gaea's /data1 and /data2 disks, run the following on Metis (use the /data2/... path for folders on the /data2 disk):

```shell
rsync -av username@gaea.niu.edu:/data1/projectName/projectFolder /lstr/sahara/projectName/
```

- the batch job examples for Metis can be copied from /home/examples/examples-metis

- Metis cluster status can be seen via the Ganglia monitor
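When there are many project folders, the per-folder rsync command above can be repeated in a loop. The sketch below assumes it is run on metis.niu.edu; the function name migrate_projects and the DRY_RUN switch are hypothetical helpers, not site tools. By default the function only prints the commands, so they can be reviewed before anything is copied.

```shell
#!/usr/bin/env bash
# Hypothetical helper: copy Gaea project folders to /lstr/sahara on Metis.
# Run it on metis.niu.edu. With DRY_RUN=1 (the default) it only prints
# the rsync commands; set DRY_RUN=0 to actually run them.
migrate_projects() {
  local user=$1 project=$2
  shift 2
  local src
  for src in "$@"; do
    # src is a full Gaea path such as /data1/projectName/projectFolder;
    # without a trailing slash, rsync recreates projectFolder under the target.
    local cmd=(rsync -av "${user}@gaea.niu.edu:${src}" "/lstr/sahara/${project}/")
    if [[ "${DRY_RUN:-1}" == 1 ]]; then
      echo "${cmd[*]}"
    else
      "${cmd[@]}"
    fi
  done
}
```

For example, `migrate_projects username projectName /data1/projectName/folderA /data2/projectName/folderB` prints one rsync command per folder; rerun it with `DRY_RUN=0` once the paths look right.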

The login nodes - metis.niu.edu and gaea.niu.edu

These nodes serve as gateways between users and the rest of the system and are the only cluster computers accessible from public networks. They are shared by all users and provide tools for running the software installed on the clusters and for developing custom applications. Although powerful, the login nodes have limited resources and should be used responsibly:

- run applications interactively only for development or testing purposes.

Production jobs should be submitted via the batch system. We impose a combined limit on login node resource usage: any process exceeding 30% of the available CPUs or memory will be killed after 30 minutes of run time. If longer interactive tests are necessary, please request an interactive batch job.
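To check whether any of your own processes on a login node are approaching this limit, you can list them with ps. This is a sketch that assumes the procps-ng ps shipped with RHEL (needed for `--sort`). Note that ps reports %CPU relative to a single core (100 means one fully used core), so compare it against the node's total core count, which nproc prints. The function name my_processes is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: show your own processes on the login node, busiest first.
# %CPU is relative to one core; %MEM is a share of physical memory;
# ELAPSED is wall-clock run time as [[dd-]hh:]mm:ss.
my_processes() {
  ps -u "$(id -un)" -o pid,pcpu,pmem,etime,comm --sort=-pcpu
}

echo "cores available: $(nproc)"
my_processes | head -n 10

# Longer interactive work belongs in an interactive batch job, e.g.
#   qsub -I -l walltime=01:00:00
# with node/core requests written as in /home/examples/examples-metis.
```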

Batch system

The PBS batch system manages all compute (a.k.a. "worker") nodes. It reserves a portion of the cluster's nodes to run a particular application (a job). The more resources a job requests, the more costly a user mistake becomes:

- always submit several short test jobs before long, massive production runs.
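A short test job can be written as a small PBS script. The sketch below is illustrative: the job name, the five-minute walltime and the output file name are placeholders, and node/core requests are left out because their exact form should be copied from /home/examples/examples-metis. Outside PBS the #PBS lines are ordinary comments, so the script can also be tried briefly with bash.

```shell
#!/bin/bash
#PBS -N short_test
#PBS -l walltime=00:05:00
#PBS -j oe
# Sketch of a short PBS test job (placeholder name, walltime and output file).
# -N sets the job name, walltime caps the run time, -j oe merges stderr into stdout.

# PBS starts the job in your home directory; switch to the submission directory.
cd "${PBS_O_WORKDIR:-$PWD}" || exit 1

OUT="short_test_${PBS_JOBID:-local}.txt"
{
  echo "host: $(uname -n)"
  echo "start: $(date)"
  # Replace the next line with a reduced-size run of the real application.
  echo "status: ok"
} > "$OUT"
cat "$OUT"
```

Saved as, for example, short_test.pbs (a hypothetical name), it would be submitted with `qsub short_test.pbs`; checking the output file before scaling up is the point of the exercise.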

The default unrestricted batch queues on Metis and Gaea allow 6 and 12 jobs, respectively, to run simultaneously. However, there is no limit on the number of submitted jobs; queued jobs are scheduled to start when possible. Additionally, jobs can be routed (based on the requested walltime, number of nodes and/or processors, etc.) to special queues that allow more jobs to run. The total number of jobs allowed to run simultaneously is 512 on Metis and 60 on Gaea. To list the available queues, run the "qstat -q" command on the login nodes.
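Because queued jobs simply wait until they can start, several short test jobs can be submitted at once and the scheduler will start them as slots free up. The hypothetical helper below loops over test parameters and passes each one to a job script through `qsub -v`; the script name, the PARAM variable and the 10-minute walltime are illustrative assumptions, and DRY_RUN=1 (the default) only prints the qsub commands.

```shell
#!/usr/bin/env bash
# Hypothetical helper: submit one short test job per parameter value.
# The job script is assumed to read the value from the PARAM environment variable.
# DRY_RUN=1 (default) prints the qsub commands; DRY_RUN=0 submits them.
submit_tests() {
  local script=$1
  shift
  local param
  for param in "$@"; do
    local cmd=(qsub -l walltime=00:10:00 -v "PARAM=${param}" "$script")
    if [[ "${DRY_RUN:-1}" == 1 ]]; then
      echo "${cmd[*]}"
    else
      "${cmd[@]}"
    fi
  done
}
```

For example, `DRY_RUN=0 submit_tests short_test.pbs 1 2 3` would queue three jobs; `qstat -u username` then lists them and their states.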

Disk space

The available disk space is intended to be used as follows:

- /home/$USER - the user's home area, with an initial quota of 25 GB. Please keep smaller files here (documents, configuration scripts, code archives). To check your disk space usage, run the "quota -s" command on the login node.

- /data1/$PROJECT/$USER and /data2/$PROJECT/$USER on Gaea, and /lstr/sahara/$PROJECT/$USER on Metis - the user's project areas, for storing the (potentially large) input and output datasets required or produced by batch jobs. Quotas are set according to project needs; please discuss the required disk space with us.
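"quota -s" reports totals; to see what is using the space, you can list the largest items in a directory with du. This is a sketch with a hypothetical function name; it uses `du -sk` (sizes in KiB) so that a plain numeric sort works, and it includes hidden entries such as .cache, which are easy to overlook in a home directory.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the N largest entries (files or directories)
# directly inside DIR, as "<size in KiB><TAB><path>", largest first.
largest_items() {
  local dir=${1:-$HOME} n=${2:-10}
  # Hidden entries are matched by .[!.]* ; unmatched globs stay literal and
  # du's resulting "No such file" errors are discarded.
  du -sk "$dir"/* "$dir"/.[!.]* 2>/dev/null | sort -rn | head -n "$n"
}

# Example: the ten largest items in your home directory
# largest_items "$HOME" 10
```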

Backup policy

We keep only a previous-day snapshot of the /home folders on the Metis cluster, available on metis.niu.edu under /nfs/ihfs/home_yesterday. We strongly encourage users to keep code under development in personal GitHub repositories and to back up essential data and results to remote locations frequently.
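A file deleted or damaged today can be copied back from the previous-day snapshot. The sketch below is hypothetical: it assumes the snapshot mirrors /home with one subdirectory per user (/nfs/ihfs/home_yesterday/username/...), which you should confirm with ls first, and it writes the copy next to the original with a .restored suffix instead of overwriting anything. SNAPSHOT_ROOT is an illustrative override variable, not a site setting.

```shell
#!/usr/bin/env bash
# Hypothetical helper: restore a path (relative to your home directory)
# from the previous-day snapshot on metis.niu.edu.
# ASSUMPTION: the snapshot keeps one directory per user under SNAPSHOT_ROOT.
restore_from_snapshot() {
  local rel=$1
  local root=${SNAPSHOT_ROOT:-/nfs/ihfs/home_yesterday}
  local src="${root}/$(id -un)/${rel}"
  local dest="${HOME}/${rel}.restored"
  if [[ ! -e "$src" ]]; then
    echo "not found in snapshot: $src" >&2
    return 1
  fi
  mkdir -p "$(dirname "$dest")"
  # -a preserves permissions and timestamps; the original path is not touched.
  cp -a "$src" "$dest" && echo "$dest"
}

# Example: restore_from_snapshot projects/analysis/run.py
```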
